Advertorial:

Meet Ability Enterprise Co., Ltd.

Edge computing capabilities have expanded the deployment of AI-enabled smart cameras into various vertical markets. How have these edge AI cameras redefined what video surveillance is and what it can be?

Traditional video surveillance systems face several problems today. They use legacy hardware from a highly fragmented ecosystem with little to no common software. In addition, such systems do not provide proactive surveillance or actionable insights, and are often consulted only after an incident has occurred.

Ability offers edge AI cameras that are designed with the developer and integrator in mind. Each is a software-defined camera: it takes on the functionality defined by the software and the application deployed on it.

Furthermore, as Ability’s camera portfolio is fully NDAA (National Defense Authorization Act) compliant, we are an ideal ODM/OEM partner for our customers in the U.S.

Using the Intel® Distribution of OpenVINO™ Toolkit, Ability’s AI cameras are intended to be developer-friendly and to reduce time-to-market. The data acquired from the AI applications deployed on the edge AI cameras can be easily integrated with back-end management systems to drive smart decision-making and policies.

The notion of AI-as-a-Service is now gaining market traction: revenue generation for businesses does not need to depend fully on the sale of hardware and/or software, but can come from the maintenance of AI algorithms. This also reduces the burden of upkeep and financing on end users.

Ability cameras can integrate multiple AI algorithms to run simultaneously on the edge. Can they be customized for Smart City applications?

Powered by the Intel® Movidius™ Myriad™ X VPU, the programmable Ability edge AI cameras allow developers to port and run image-centric neural networks on the edge cameras alone. Using top-of-the-line image sensors from Sony, from 2MP to 8MP with various lens combinations, the cameras can address a range of practical use cases in Smart Cities, such as traffic monitoring, smart buildings, security and assistance, and crowd monitoring. They also offer best-in-class IP and IK ratings to withstand the harsh conditions expected in these environments.

More importantly, the Intel® Distribution of OpenVINO™ Toolkit enables developers to easily take AI models from its Public Model Zoo repository for quick demonstration, or to port their own proprietary models and meet their performance goals. For instance, a traffic monitoring system may require models including vehicle-type detection and license plate recognition to run concurrently. In another example, a crowd monitoring system may require face detection, body detection and object detection.
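As a rough illustration of the concurrent-model idea in the traffic example, the sketch below hands one frame to several models and collects the results by name. It is not Ability's or Intel's implementation: the two model functions are hypothetical stand-ins that return made-up labels, and on a real camera the inference runtime, not a Python thread pool, would schedule the networks.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# A "model" here is any callable that maps a frame to a list of result labels.
Model = Callable[[bytes], List[str]]


def detect_vehicle_type(frame: bytes) -> List[str]:
    # Hypothetical stand-in for a vehicle-type detection network.
    return ["car"] if frame else []


def recognize_license_plate(frame: bytes) -> List[str]:
    # Hypothetical stand-in for a license plate recognition network.
    return ["ABC-1234"] if frame else []


def run_models(frame: bytes, models: Dict[str, Model]) -> Dict[str, List[str]]:
    """Submit the same frame to every model and collect results by model name."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, frame) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


# Assumed model set for the traffic monitoring example.
TRAFFIC_MODELS: Dict[str, Model] = {
    "vehicle_type": detect_vehicle_type,
    "license_plate": recognize_license_plate,
}

if __name__ == "__main__":
    print(run_models(b"\x00placeholder-frame", TRAFFIC_MODELS))
```

A crowd monitoring deployment would follow the same pattern with a different model set, for example face, body and object detection.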

It is also important to underscore the value of a developer-friendly device. As edge AI cameras are being developed, continuous learning is a natural outcome, and the ability to provide iterative improvements will be essential.

How do Ability’s AI-enabled video solutions impact the retail market?

The Ability camera allows for inference logic to be performed directly on the camera. For retail users, this creates efficiency on multiple fronts:

- Operational: Fewer logistics, as cameras do not need to be complemented by a companion computer.

- Economic: An in-store retail camera network can be achieved more economically because the equipment needed to accomplish the task is reduced by about 40%. This is due to the diminished need for CPU/computers because processing of inference logic is done on board the camera. This also creates significant savings on bandwidth. Examples of algorithms/inference logic that can run on the cameras include people counting, demographics analysis, face detection, and dwell time.
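To make two of the analytics named above more concrete, here is a minimal sketch of how people counting and dwell time could be derived from anonymous enter/exit events. The `TrackEvent` structure, its field names and the sample values are assumptions for illustration, not the format used by Ability or VSBLTY.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TrackEvent:
    track_id: int       # anonymous per-visit identifier (assumed to come from a tracker)
    timestamp_s: float  # seconds since the start of observation
    kind: str           # "enter" or "exit"


def summarize(events: List[TrackEvent]) -> Dict[str, float]:
    """Return the number of people seen and the mean dwell time of completed visits."""
    entered: Dict[int, float] = {}
    dwell_times: List[float] = []
    for event in sorted(events, key=lambda e: e.timestamp_s):
        if event.kind == "enter":
            entered[event.track_id] = event.timestamp_s
        elif event.kind == "exit" and event.track_id in entered:
            dwell_times.append(event.timestamp_s - entered.pop(event.track_id))
    # Completed visits plus people who entered and have not yet left.
    people_count = len(dwell_times) + len(entered)
    mean_dwell = sum(dwell_times) / len(dwell_times) if dwell_times else 0.0
    return {"people_count": people_count, "mean_dwell_s": mean_dwell}


if __name__ == "__main__":
    sample = [
        TrackEvent(1, 0.0, "enter"),
        TrackEvent(2, 5.0, "enter"),
        TrackEvent(2, 25.0, "exit"),
        TrackEvent(1, 30.0, "exit"),
        TrackEvent(3, 40.0, "enter"),
    ]
    print(summarize(sample))
```

In a design like this, only the small summary would need to leave the camera rather than the video stream, which is the kind of bandwidth saving the text describes.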

In retail, cameras are used for operational visibility and for brand monetization. Cameras can also be used to measure the performance and audience of digital signage, a new source of potential revenue for retailers.

Jay Hutton, CEO of VSBLTY Groupe Technologies Corp., has also commented: “Computer vision is uniquely equipped to anonymously measure audiences in physical spaces. Retail is evolving into a powerful and valuable media channel. Computer vision is critical to this as it is only through computer vision that consumer engagement can be measured in real time effectively. Our proven ability to run computer vision directly on the camera changes the unit level economics for this market.”

As a Microsoft AEDP partner, Ability can provide solutions that enable Microsoft Azure’s partners to expand their services into the retail segment.

Learn more: www.youtube.com/watch?v=tTGwpdEJnkI

How can Ability’s AI-enabled video solutions be implemented and deployed in smart traffic applications?

In smart traffic, it is extremely important that the video surveillance hardware is reliable and robust, a requirement Ability’s cameras have met without issue. Some scenarios, however, present unique problems in smart traffic applications.

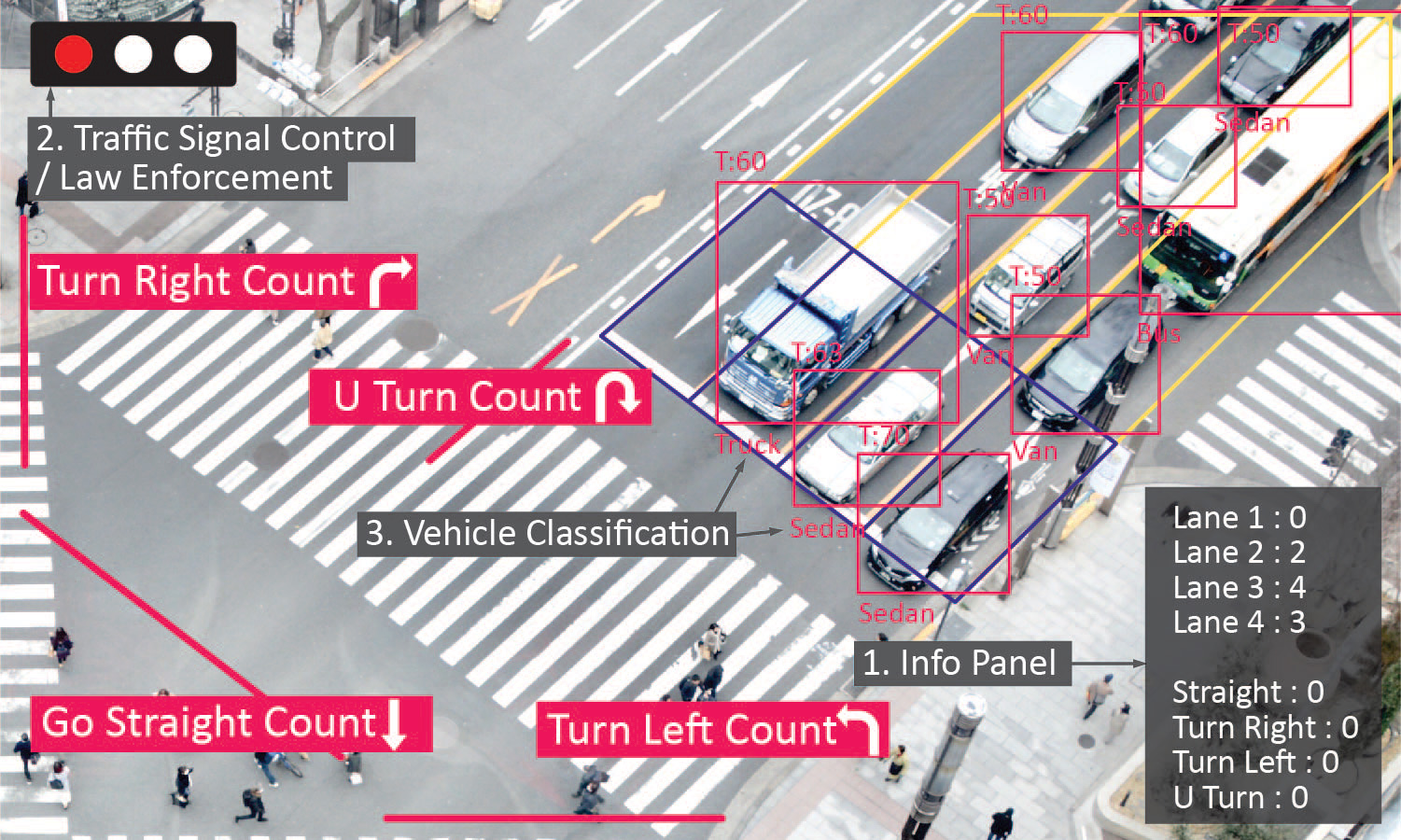

As illustrated in the photo above, Ability cameras and analytics provided several benefits for city traffic management, including:

- Vehicle classification, counting and tracking;

- Waiting time calculation;

- License Plate Recognition (LPR);

- Illegal maneuver detection;

- Journey time calculation; and

- Incident detection.
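One item in the list above, journey time calculation, can be sketched from license plate reads at two camera locations: match each plate seen at both points and take the time difference. The sketch below assumes reads arrive as `(plate, timestamp)` pairs. The function names and sample plates are invented for illustration and do not describe the deployed analytics.

```python
from statistics import median
from typing import Dict, List, Optional, Tuple

PlateRead = Tuple[str, float]  # (plate text, timestamp in seconds)


def journey_times(upstream: List[PlateRead], downstream: List[PlateRead]) -> Dict[str, float]:
    """Seconds from a plate's first upstream read to its first later downstream read."""
    first_seen: Dict[str, float] = {}
    for plate, ts in upstream:
        first_seen[plate] = min(ts, first_seen.get(plate, ts))
    result: Dict[str, float] = {}
    for plate, ts in sorted(downstream, key=lambda read: read[1]):
        # Ignore plates never seen upstream, repeats, and reads that precede the upstream read.
        if plate in first_seen and plate not in result and ts >= first_seen[plate]:
            result[plate] = ts - first_seen[plate]
    return result


def median_journey_time(upstream: List[PlateRead], downstream: List[PlateRead]) -> Optional[float]:
    """Median journey time across matched vehicles, or None if nothing matched."""
    times = list(journey_times(upstream, downstream).values())
    return median(times) if times else None


if __name__ == "__main__":
    # Made-up sample reads at two hypothetical camera points.
    point_a = [("AAA111", 0.0), ("BBB222", 10.0), ("CCC333", 20.0)]
    point_b = [("BBB222", 100.0), ("AAA111", 60.0), ("DDD444", 70.0)]
    print(journey_times(point_a, point_b))
    print(median_journey_time(point_a, point_b))
```

The median is used rather than the mean so that a single vehicle that stops between the two points does not skew the figure. That is a design choice of this sketch, not a statement about the system shown in the photo.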

Another consideration is the pace of the market. Continuous learning and iterative improvement are a cornerstone of a successful AI deployment. The Intel® Distribution of OpenVINO™ Toolkit models available in the Public Model Zoo, together with the AI framework’s support for easy Over-The-Air (OTA) updates via SSH or HTTPS, ensure that users can readily deploy and update the appropriate AI models for their unique use cases.

Ability is currently working with another technology partner in the deployment of a surveillance network in Mexico City. How is this a model Smart Cities project from both a design and use case perspective?

VSBLTY Groupe Technologies Corp., a leading provider of security and retail analytics technology, and Ability Enterprise Co., Ltd. have co-developed a first-of-its-kind high-resolution camera with self-contained inference logic, which can be used in retail and “Smart City” applications. The Ability intelligent cameras, powered by VSBLTY technology, are currently being deployed to improve public safety in Benito Juarez, a borough of Mexico City.

Deploying inference logic directly on cameras is an economical way to provide AI capability for one of the world’s most dangerous cities. Additionally, layering AI logic on top of the existing camera networks and adding smart cameras is an effective way to extend the reach of the network.

Jay Hutton, CEO of VSBLTY, commented: “When we were introduced to Ability by Intel, we immediately understood the enduring commercial value of running inference logic directly on the camera. We believe the future will be characterized by AI getting closer to the edge, and our work with Ability is a great example of that. It gives us an important competitive differentiation.”

What is the future of AI-driven video surveillance as technology advances and applications expand?

The future of AI-driven video surveillance is bright; in fact, it will likely change the context of how the words “video surveillance” are used.

In addition to retail and smart traffic, Ability is engaging with partners to address aspects of public safety (for example, zoning and construction sites) and of healthcare and fitness (for example, patient well-being), to name just a few, with more to come.

While these examples are fundamentally part of the function of video surveillance, we believe that the technology will be deployed so ubiquitously that the public will see it as a form of public assistance, a tool intended to improve people’s quality of life.

The future is not edge-only or cloud-only, but a combination of both. The notion of a collaborative edge-cloud video analytics framework has been introduced by some of the world’s largest solution providers.

Successful systems integrators will be able to balance the processing load most effectively between the edge and the cloud for the best user experience possible. A combination of flexible deployment through distributed processing, reduced latency through edge processing, and cost optimization will drive the decision-making process.

To learn more about Ability Enterprise Co., Ltd., please visit www.abilitycorp.com.tw/html/product_system.php